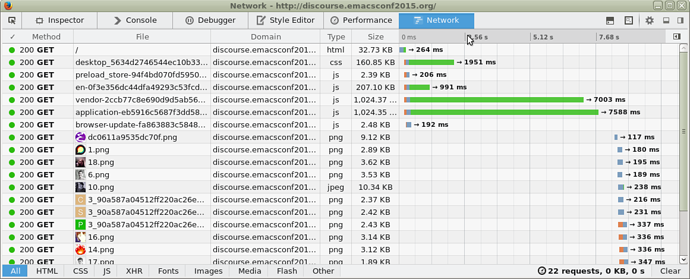

It sends about 2 MB of JavaScript on first load, which takes 7+ seconds on my not-that-slow DSL connection. Here’s a screenshot of the network load times:

Discourse slow to load because of JS bloat

Gitit uses jQuery and sends about 500 KB of JS bloat. That’s not as bad as 2 MB, but still bad.

I looked at the TCP streams for both Discourse and gitit with Wireshark, and neither is sending compressed responses. Is that an nginx config issue that can be fixed? I re-enabled external interfaces on gitit so I could connect from my browser directly to the Happstack server on port 5001, and still got no compression, even though gitit is configured to compress.
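
To repeat that check without Wireshark, here is a minimal sketch using only the Python standard library. It advertises gzip support with an `Accept-Encoding` header and reports the `Content-Encoding` the server sends back, plus the size on the wire versus the size after decoding. It assumes the URLs are reachable from where you run it; the helper names are made up for this example.

```python
import gzip
import sys
import urllib.request
from typing import Optional, Tuple


def summarize(encoding: Optional[str], body: bytes) -> Tuple[Optional[str], int, int]:
    """Return (Content-Encoding, bytes on the wire, bytes after undoing gzip)."""
    decoded = gzip.decompress(body) if encoding == "gzip" else body
    return encoding, len(body), len(decoded)


def check_compression(url: str) -> Tuple[Optional[str], int, int]:
    """Fetch url while advertising gzip support.

    urllib never decompresses on its own, so the body it returns is exactly
    what was transferred.
    """
    req = urllib.request.Request(url, headers={"Accept-Encoding": "gzip"})
    with urllib.request.urlopen(req) as resp:
        body = resp.read()
        encoding = resp.headers.get("Content-Encoding")
    return summarize(encoding, body)


if __name__ == "__main__":
    # Usage: python check_gzip.py URL [URL ...]
    for url in sys.argv[1:]:
        encoding, wire, decoded = check_compression(url)
        print(f"{url}: Content-Encoding={encoding!r}, {wire} bytes sent, {decoded} bytes decoded")
```

A `Content-Encoding=None` result with equal byte counts means the response came back uncompressed.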

Apparently Discourse inside of Docker has gzip enabled:

    server {
        listen 80;
        gzip on;
        gzip_vary on;
        gzip_min_length 1000;
        gzip_comp_level 5;
        gzip_types application/json text/css application/x-javascript application/javascript;

According to Firefox, the HTML is being compressed with gzip, but none of the JavaScript is. The JavaScript is all minified, though. Is gzipping JavaScript something people do? It’s about 2.5 MB uncompressed.

phr noreply@discourse.emacsconf2015.org writes:

I’ve never investigated this issue before, but I’d sure WANT the JS to be gzipped. The minified `vendor-2ccb*.js` file is 2.4 MB uncompressed and 533 KB gzipped.
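
To see what gzip would save on a particular file before touching the server, a sketch like the one below compresses a local copy at a few levels and times each run. Levels 5 and 6 are the `gzip_comp_level` values used in the two nginx configs in this thread. Python’s `gzip` module is built on zlib, as nginx’s gzip module is, so the sizes should land in the same ballpark, but treat that as an assumption rather than an exact match. The file name in the usage comment is a placeholder.

```python
import gzip
import sys
import time
from typing import List, Sequence, Tuple


def gzip_report(data: bytes, levels: Sequence[int] = (1, 5, 6, 9)) -> List[Tuple[int, int, float]]:
    """Return (level, compressed size in bytes, seconds taken) for each gzip level."""
    rows = []
    for level in levels:
        start = time.perf_counter()
        packed = gzip.compress(data, compresslevel=level)
        rows.append((level, len(packed), time.perf_counter() - start))
    return rows


if __name__ == "__main__":
    # Usage: python gzip_report.py vendor.js   (placeholder file name)
    with open(sys.argv[1], "rb") as f:
        data = f.read()
    print(f"uncompressed: {len(data)} bytes")
    for level, size, secs in gzip_report(data):
        print(f"level {level}: {size} bytes ({size / len(data):.0%}), {secs * 1000:.1f} ms")
```

Running it on the vendor bundle would show how much of the size win level 5 or 6 already captures, and how much extra CPU the higher levels cost.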

Added: another bit of weirdness. When I wget that file it’s 2.4 MB, but the Firefox timing graph further up says it’s only 1.2 MB.

I needed to enable gzip compression on the nginx proxy. I added this to the “http” block:

    gzip on;
    gzip_disable "msie6";
    gzip_vary on;
    gzip_proxied any;
    gzip_comp_level 6;
    gzip_buffers 16 8k;
    gzip_http_version 1.0;
    gzip_types text/plain text/css application/json application/x-javascript text/xml application/xml application/xml+rss application/javascript text/javascript;

gzip now works on all of our services. Thanks for bringing this up!

I wonder whether nginx can be made to cache the gzipped JS it’s relaying from the other servers, since the compute time for 500 KB of compressed output is non-trivial. We’ll also need to check that compression still happens once we get HTTPS working.
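
One common way to avoid recompressing the same static files on every request is to compress them once ahead of time and let nginx’s `gzip_static on;` directive (from `ngx_http_gzip_static_module`, which may need to be compiled in) serve `foo.js.gz` in place of `foo.js`. That only helps for files nginx serves from disk itself. Responses proxied from Discourse or gitit would need a different approach, so treat this as a sketch of the idea, not a drop-in fix for this setup. The script below, whose name and defaults are assumptions, writes a `.gz` next to each `.js`/`.css` file and gives it the original file’s modification time, as the nginx docs recommend.

```python
import gzip
import os
import shutil
from pathlib import Path
from typing import List, Sequence


def precompress(root: str, suffixes: Sequence[str] = (".js", ".css"), level: int = 9) -> List[Path]:
    """Write foo.js.gz next to foo.js for each matching file under root.

    Returns the .gz files written; files whose .gz is already current are skipped.
    """
    # Collect sources first so newly written .gz files never join the walk.
    sources = [p for p in Path(root).rglob("*") if p.is_file() and p.suffix in suffixes]
    written = []
    for path in sources:
        target = path.with_name(path.name + ".gz")
        src_stat = path.stat()
        if target.exists() and target.stat().st_mtime_ns == src_stat.st_mtime_ns:
            continue  # already compressed from this version of the file
        with open(path, "rb") as src, gzip.open(target, "wb", compresslevel=level) as dst:
            shutil.copyfileobj(src, dst)
        # Match the original's timestamps so the pair is recognizably in sync.
        os.utime(target, ns=(src_stat.st_atime_ns, src_stat.st_mtime_ns))
        written.append(target)
    return written
```

Compressing at level 9 once, at deploy time, trades a one-off CPU cost for smaller files on every later request.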

Added: I confirmed that Discourse and gitit are both speedier now, and the Firefox network console reports that both are compressed, which is reflected in the timings. I haven’t bothered wiresharking it. Thanks for fixing!!!